Introduction

I began working with Professor Christopher Clark in the Lab for Autonomous and Intelligent Robotics (LAIR) in the fall of my sophomore year as a member of the Shark Tracking team.

The LAIR had over 10 student researchers working on four major projects and several individual topics. When I joined, the Shark Tracking team consisted of two other Mudd students (Yukun Lin and Hannah Kastein) and me. During my tenure, Richard Piersall and Rowan Zellers joined the team while Hannah left.

This project is described briefly on the LAIR Projects page (click on AUV Shark Tracking on the left-hand side). For an idea of where the project stood before I joined, check out this ABC7 News story about a test deployment to Catalina Island the previous summer. To see what was going on in the LAIR in the summer of 2013, check out this post on the HMC admissions blog. One of our Catalina field deployments that summer was written up by the Orange County Register.

My main job on the team was improving the control algorithms on the robots so that they could follow the sharks more intelligently and efficiently. I also worked on improving the robots’ hardware.

One of the awesome things about this project was getting up close and personal with a lot of sharks. The video below is a compilation of some of the footage we captured at Catalina Island in the summer of 2013.

Overview

The shark tracking project is a cooperative effort between the LAIR and the CSU Long Beach Shark Lab. Professor Chris Lowe (I know, another Chris…), who heads up the Shark Lab, is interested in collecting high-resolution positioning data on sharks and other fish. This data is an important tool for maintaining fish populations and their habitat. In other words, by figuring out where sharks go and why, we can better protect them and the marine ecosystem.

Unfortunately, the current methods of tracking are not sufficient: GPS tracking tags do not transmit underwater; stationary arrays of sensors only work if the tagged animal is inside the boundaries of the array; and manual tracking is labor-intensive and expensive. As such, this project aims to develop a means of autonomous active tracking.

The problem boils down to the following: given position data for the robot and a set of sensor measurements, determine the position of the shark.

Now, that might not sound too difficult at first, but let me assure you that it is. It is very difficult. The problem involves a large number of variables and some significant technological limitations.

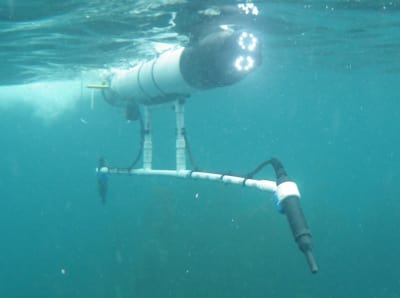

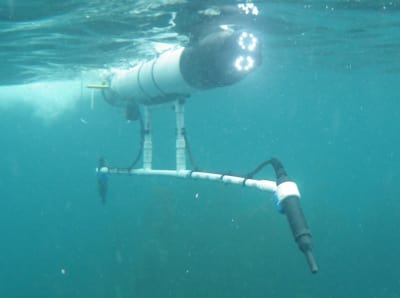

This project makes use of two OceanServer Iver2 AUVs. The Iver2 is a torpedo-shaped robot that has a rear propeller for propulsion and four fins to control pitch and yaw. The sensor payload includes a 3-DOF (degree-of-freedom) compass, a wireless antenna, a GPS receiver, and a Doppler Velocity Log (DVL).

The AUVs communicate with each other, as well as with a topside modem, via a Woods Hole Oceanographic Institution Micro-Modem and externally mounted transducers. Each AUV is outfitted with a Lotek MAP600RT receiver and an associated stereo-hydrophone set, designed to listen for acoustic signals at a frequency of 76 kHz.

These allow the vehicles to receive transmissions from Lotek MM-M-16-50-PM acoustic tags, which send a transmission at 76.8 kHz every two seconds.

The Lotek MapHost software associated with the receiver records the tag ID, time of detection, signal strength, pressure, and presence of motion. This information, together with the separation of the hydrophones, allows the angle between the AUV and the acoustic tag to be calculated.

There are several challenges associated with estimating the shark’s state from these sensor measurements. As indicated above, a shark located at a given angle to the left of the robot will generate the same measurement as one located at the corresponding angle on the right. In addition, the stereo-hydrophone system generates an angle-to-tag measurement with a resolution of only approximately 10 degrees. Furthermore, the rate at which angle measurements arrive is highly dependent on the acoustic environment; gaps of up to 30 seconds without a new measurement are common. To make matters worse, even with distance measurements to the shark, the actual state of the shark cannot be determined from the signal data alone.
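To make the left/right ambiguity concrete, here is a minimal Python sketch of the textbook far-field time-difference-of-arrival model for a two-hydrophone pair. It is not Lotek’s proprietary angle calculation. It assumes the hydrophones sit on a fore-aft line (the arrangement that produces a left/right rather than a front/back ambiguity), a nominal sound speed of 1500 m/s, and the 2.4 m spacing quoted in the Hardware section; `bearing_candidates` and its sign convention are hypothetical.

```python
import math

SOUND_SPEED = 1500.0  # m/s, nominal speed of sound in seawater (assumed)
BASELINE = 2.4        # m, hydrophone spacing given in the Hardware section


def bearing_candidates(delta_t, heading_deg):
    """Return the two compass bearings (degrees) consistent with one TDOA reading.

    Assumes a far-field source and a fore-aft hydrophone pair (hypothetical setup).
    delta_t: arrival time at the aft hydrophone minus arrival time at the fore one (s).
    heading_deg: AUV compass heading (degrees clockwise from north).
    """
    cos_alpha = SOUND_SPEED * delta_t / BASELINE
    cos_alpha = max(-1.0, min(1.0, cos_alpha))  # noise can push the ratio past +/-1
    alpha = math.degrees(math.acos(cos_alpha))  # angle off the bow, 0..180 degrees
    # The pair cannot tell which side the sound came from, so both mirror images fit.
    starboard = (heading_deg + alpha) % 360.0
    port = (heading_deg - alpha) % 360.0
    return starboard, port


if __name__ == "__main__":
    # Equal arrival times: the tag is abeam, either due starboard or due port.
    print(bearing_candidates(0.0, 90.0))
```

Because every reading maps to two mirror-image bearings, a single measurement cannot distinguish them; only measurements taken from other vantage points can.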

To determine where the shark actually is, we use something called Monte Carlo Localization (MCL). In this technique, estimates of the target’s location are represented by a set of “particles”. Each particle has an associated weight that corresponds to how likely it is.

Initially, particle states are assigned randomly within a bounded area centered on the robot.

At each subsequent time step, particles are propagated according to a stochastic motion model that simulates shark motion.

Next, the weights of the particles are recalculated based on the latest sensor measurements.

The estimated shark state is then taken to be the average of all the particle estimates. Finally, a new set of particles is resampled from the current set, with preference given to the most probable ones.
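The loop above can be sketched as a small Python particle filter. This is an illustration under stated assumptions, not the team’s estimator: the 2D planar state, the random-heading/random-speed motion model, the Gaussian likelihood on an unsigned angle-off-the-bow measurement (with a 10-degree sigma loosely matching the resolution quoted above), the weighted mean as the estimate, and systematic resampling are all choices made for the example, and every function name is hypothetical.

```python
import math
import random

# Hypothetical MCL sketch. Positions are planar (x east, y north, meters);
# headings are radians counter-clockwise from +x. Each particle is [x, y, weight].


def wrap(angle):
    """Wrap an angle in radians to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def init_particles(robot_xy, n, half_width):
    """Scatter n equally weighted particles uniformly in a square centered on the robot."""
    rx, ry = robot_xy
    return [[rx + random.uniform(-half_width, half_width),
             ry + random.uniform(-half_width, half_width),
             1.0 / n] for _ in range(n)]


def propagate(particles, dt, max_speed):
    """Assumed stochastic motion model: random heading and speed for each particle."""
    for p in particles:
        heading = random.uniform(-math.pi, math.pi)
        speed = random.uniform(0.0, max_speed)
        p[0] += speed * dt * math.cos(heading)
        p[1] += speed * dt * math.sin(heading)


def reweight(particles, robot_xy, robot_heading, measured_angle, sigma):
    """Update weights from an unsigned angle-off-the-bow measurement, then normalize.

    Comparing |relative angle| with the measurement gives mirror-image particles
    on the left and right identical likelihoods, i.e. the left/right ambiguity.
    """
    rx, ry = robot_xy
    for p in particles:
        rel = wrap(math.atan2(p[1] - ry, p[0] - rx) - robot_heading)
        err = abs(rel) - measured_angle
        p[2] *= math.exp(-0.5 * (err / sigma) ** 2)
    total = sum(p[2] for p in particles)
    for p in particles:
        # If every weight underflowed to zero, fall back to uniform weights.
        p[2] = p[2] / total if total > 0.0 else 1.0 / len(particles)


def estimate(particles):
    """Weighted mean of the particle positions."""
    return (sum(p[0] * p[2] for p in particles),
            sum(p[1] * p[2] for p in particles))


def resample(particles):
    """Systematic resampling: heavier particles are copied more often."""
    n = len(particles)
    step = 1.0 / n
    start = random.uniform(0.0, step)
    new_set, i, cumulative = [], 0, particles[0][2]
    for k in range(n):
        target = start + k * step
        while target > cumulative and i < n - 1:
            i += 1
            cumulative += particles[i][2]
        new_set.append([particles[i][0], particles[i][1], step])
    return new_set


if __name__ == "__main__":
    random.seed(0)
    particles = init_particles((0.0, 0.0), 1000, 100.0)
    for step in range(3):  # one iteration per time step (example values)
        propagate(particles, dt=2.0, max_speed=1.0)
        reweight(particles, (0.0, 0.0), 0.0, math.radians(90.0), math.radians(10.0))
        print(step, estimate(particles))
        particles = resample(particles)
```

Since mirror-image particles receive identical weights, with only ambiguous readings from one vantage point the weighted mean can land between the two clusters rather than on either of them.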

Software

Framework

Much of my first few months on the team was spent simply understanding the code-base and how all of the sub-systems worked and interacted. The pre-existing control code was especially difficult to understand. Due to the pressures of debugging in the field and the lack of a pre-existing framework to work in, the majority of the code ended up being inside the GUI used to set up missions!

I decided that we needed to set up a framework for the shark tracking code in order to make it more modular, extensible, and maintainable. My initial plan was to use something like ROS or MOOS, but neither worked with Windows (which our system required). Furthermore, none of the other frameworks I could find suited our needs. As such, I chose to implement a custom framework based on the publish-subscribe pattern.

To that end, I divided up our system into two interfaces and five subsystems:

- User Interface (UI): this could take the form of a GUI or a console application

- Shark Tracking Interface (STI): this serves as an abstraction layer for all of the shark tracking code and as a broker for implementing publish-subscribe. This prevents the UI from directly accessing any of the shark tracking code, which keeps everything modular.

- Localization: responsible for figuring out where the shark is relative to the Iver based on sensor data

- Controller: directs the Iver’s motion based on its current position, the position of the other robot, and the estimated position of the shark

- Database: stores all sensor and state estimation data along with relevant control variables. Grouping the data in this way makes it easy to generate comprehensive and up-to-date log files.

- Intra-AUV Communications: each Iver contains two processors. The primary processor runs proprietary code that interfaces with the sensors and actuators on the robot. The secondary processor runs our code. This module is responsible for ensuring that the two processors share relevant data.

- Inter-AUV Communications: given that this is a multi-robot system, it is advantageous for each robot to know what the other is up to.

A graphical overview of the interactions between each subsystem can be seen below. Note that all connections are two-way.

Because each subsystem and the UI interface only with the STI, modules can be readily swapped out. It is also easy for team members to work on separate parts of the code without stepping on each other’s toes.
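The broker idea behind the STI can be illustrated with a small Python sketch. The original framework’s language and interfaces are not described here (only that it had to run on Windows), so the class, method, and topic names below are hypothetical; the point is that publishers and subscribers talk only to the broker, never to each other.

```python
from typing import Any, Callable, Dict, List

Handler = Callable[[Any], None]


class SharkTrackingInterface:
    """Minimal publish-subscribe broker (hypothetical stand-in for the STI)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register handler to be called with every message published on topic."""
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, message: Any) -> None:
        """Deliver message to every subscriber of topic; the publisher never sees them."""
        # Iterate over a copy so a handler can safely register more handlers.
        for handler in list(self._subscribers.get(topic, [])):
            handler(message)


if __name__ == "__main__":
    sti = SharkTrackingInterface()
    log = []
    # e.g. the Database module records every shark estimate...
    sti.subscribe("shark_estimate", log.append)
    # ...while the Controller reacts to the same topic (hypothetical topic name).
    sti.subscribe("shark_estimate", lambda est: print("steer toward", est))
    # The Localization module publishes without knowing who is listening.
    sti.publish("shark_estimate", (12.0, -3.5))
    print(log)
```

Swapping a module then amounts to subscribing a different handler to the same topics; nothing else in the system has to change.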

In all, refactoring the entire codebase resulted in a much more comprehensible, robust, and modular system that was far easier to work with going forward.

Tracking System

The goal of the tracking system is to localize on a shark and then remain close to it. At the same time, however, we don’t want to alter its behavior, so the robot should keep its distance. To that end, the system currently uses a behavior-based controller.

When the robot is close to where it thinks the shark is, it will circle that point at a safe distance. Once its estimate of the shark’s position has moved appreciably, it will then head directly for that new position and start circling once it gets close.
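The two behaviors described above (head for the estimate, then circle it) can be sketched as a small state machine. The radius and thresholds are placeholder values, not the ones used on the Ivers, and `CircleController` is hypothetical; it only decides which behavior is active and around which point, leaving the actual waypoint or loiter commands to the vehicle’s own navigation.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class CircleController:
    """Hypothetical two-behavior controller: transit to the estimate, then circle it."""
    circle_radius: float = 20.0   # m, stand-off distance while circling (assumed value)
    retarget_dist: float = 15.0   # m, estimate movement that triggers a new target (assumed)
    arrive_margin: float = 5.0    # m, how close to the circle counts as "arrived" (assumed)
    center: Optional[Point] = None
    mode: str = "transit"

    def update(self, auv_xy: Point, shark_est: Point) -> Tuple[str, Point]:
        """Return the active behavior and the point it is built around."""
        if self.center is None or math.dist(self.center, shark_est) > self.retarget_dist:
            # The estimate has moved appreciably: head straight for the new position.
            self.center = shark_est
            self.mode = "transit"
        if self.mode == "transit" and (
            math.dist(auv_xy, self.center) <= self.circle_radius + self.arrive_margin
        ):
            # Close enough: start circling at a safe distance.
            self.mode = "circle"
        return self.mode, self.center


if __name__ == "__main__":
    ctrl = CircleController()
    print(ctrl.update((0.0, 0.0), (100.0, 0.0)))
    print(ctrl.update((78.0, 0.0), (100.0, 0.0)))
```

Requiring the estimate to move by more than `retarget_dist` before switching keeps the robot from abandoning its circle every time a noisy measurement nudges the estimate.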

The robot is currently constrained to a bounded area; it is not allowed to circle or target a point outside of this boundary. The boundary was set in order to ensure the robot did not collide with the dock or any of the boats and so that it avoided the large kelp beds closer to shore (not shown in the background image). In the future, we hope to incorporate additional sensors to allow the Iver to avoid obstacles itself, such that the boundary would become largely unnecessary.
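Enforcing the boundary requires a test for whether a candidate target point lies inside the operating region. A common choice is the ray-casting point-in-polygon test, sketched below in Python. `inside_region` is a hypothetical helper, and it assumes the corner coordinates are expressed in planar coordinates (over an area the size of a harbor, treating latitude/longitude pairs as planar is usually an acceptable approximation). What the controller does with a rejected point is not specified here.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def inside_region(point: Point, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: True if point lies inside the polygon.

    polygon lists the corners in order (either winding). Points exactly on an
    edge may be classified either way, which is harmless given a safety margin.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point can be crossed.
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge meets that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:  # crossing lies on the ray cast toward +x
                inside = not inside
    return inside


if __name__ == "__main__":
    region = [(0.0, 0.0), (100.0, 0.0), (100.0, 50.0), (0.0, 50.0)]  # hypothetical corners, meters
    print(inside_region((50.0, 25.0), region), inside_region((150.0, 25.0), region))  # True False
```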

A visualization of a test run (one Iver tracking a tagged boat) is shown below. This is an excellent example of how the system works because we have truth data for the positions of the boat and the AUV (both have GPS locators on them). The meaning of each of the features in the video is as follows:

- As the legend indicates, the red dot is the estimated position of the target (in this case a boat), the yellow dot is the actual position of the boat, and the purple dot is the AUV.

- The blue circle around the AUV is the current range estimate to the target.

- The two blue cones emanating from the AUV are the current angular position estimates to the target. These are calculated using data collected from the hydrophones by Lotek’s proprietary software.

- The dotted green line is the designated operating region of the robot (set in the code using GPS coordinates for the corners).

As you can see, the AUV’s estimate of the position is quite accurate and consistent. This is rather remarkable given the poor quality of the data and low sampling rate (typically we’ll get one valid sample every 10-15 seconds, but one sample per three seconds is the maximum). This localization is Yukun’s focus in the project. For more information on calculating distance and the localization techniques used, check out our paper from the 2013 UUST conference: Using Time of Flight Distance Calculations for Tagged Shark Localization [PDF].
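The paper referenced above describes the actual distance calculation. The Python below only illustrates the basic time-of-flight idea under strong simplifying assumptions: that the tag pings on an exactly known period (two seconds, per the tag description earlier), that one emission time `t0` is known on the receiver’s clock, that clock drift is negligible, and that the tag is within one period’s travel distance. `tof_range` and the 1500 m/s sound speed are assumptions for illustration.

```python
SOUND_SPEED = 1500.0  # m/s, nominal speed of sound in seawater (assumed)
PING_PERIOD = 2.0     # s, tag transmission interval from the tag description


def tof_range(arrival_time, t0, period=PING_PERIOD, c=SOUND_SPEED):
    """Range (m) from one detection under idealized assumptions.

    Assumes the tag emits exactly at t0 + k * period on the receiver's clock
    (no clock drift) and that the travel time is shorter than one period,
    i.e. the tag is closer than c * period (3 km with these values).
    """
    travel_time = (arrival_time - t0) % period  # time since the most recent emission
    return c * travel_time
```

In practice, tag and receiver clocks drift relative to one another, so a real implementation has to keep re-estimating the emission time; this sketch ignores that entirely.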

As for my controller, you can see that it does remain correctly bounded and switches its circling point when the target has moved sufficiently.

AUV Cooperation

In order to maximize the probability of detecting a shark, the quantity of information obtained, and the quality of said information, it is advantageous to use multiple AUVs.

In our system, when both AUVs are deployed, they share information via an acoustic modem. This information is used to supplement their own sensor inputs for localization purposes and to coordinate motion (to avoid collisions and to ensure varied sensor vantage points).
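One common way to use a partner’s measurements in a particle filter is to multiply each particle’s weight by the likelihood of every available reading, assuming the measurement noise on the two AUVs is independent. The Python sketch below shows that generic approach, not necessarily the team’s estimator; the unsigned-angle measurement model, the Gaussian noise, and the function names are assumptions. It also shows why a second vantage point helps: a reading from the other AUV can rule out one of the two mirror-image positions.

```python
import math


def angle_likelihood(target_xy, auv_xy, auv_heading, measured_angle, sigma):
    """Gaussian likelihood of an unsigned angle-off-the-bow measurement (radians).

    Planar coordinates; heading in radians counter-clockwise from +x.
    """
    rel = math.atan2(target_xy[1] - auv_xy[1], target_xy[0] - auv_xy[0]) - auv_heading
    rel = math.atan2(math.sin(rel), math.cos(rel))  # wrap to [-pi, pi]
    err = abs(rel) - measured_angle
    return math.exp(-0.5 * (err / sigma) ** 2)


def fused_likelihood(target_xy, measurements, sigma):
    """Product of per-AUV likelihoods, assuming independent measurement noise.

    measurements: (auv_xy, auv_heading, measured_angle) tuples, e.g. the local
    reading plus one relayed from the other AUV over the acoustic modem.
    """
    likelihood = 1.0
    for auv_xy, auv_heading, measured_angle in measurements:
        likelihood *= angle_likelihood(target_xy, auv_xy, auv_heading, measured_angle, sigma)
    return likelihood


if __name__ == "__main__":
    sigma = math.radians(10.0)
    readings = [((0.0, 0.0), 0.0, math.radians(90.0)),    # AUV A: tag abeam, side unknown
                ((50.0, 50.0), 0.0, math.radians(180.0))]  # AUV B: tag dead astern
    for candidate in [(0.0, 50.0), (0.0, -50.0)]:  # A's two mirror-image hypotheses
        print(candidate, fused_likelihood(candidate, readings, sigma))
```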

The long-exposure photo below shows a pair of AUVs coordinating to circle a set point.

The animation below shows how they do this more explicitly. The data used in the animation was collected during field testing at Catalina Island in the summer of 2012.

Both AUVs track a common circle, then later depart to track a separate circle. To avoid collisions, the two robots circle at different radii and leave the circle at different times (note that in the current algorithm, the AUVs depart from the closest point of the circle, rather than the furthest). To ensure varied sensor vantage points, the two AUVs modulate their speed to remain opposite one another.
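The speed modulation can be sketched as a simple proportional controller on each AUV’s angular position around the circle. This is a hypothetical Python illustration, not the algorithm run in the field: it assumes both vehicles circle counter-clockwise, know each other’s positions, and can command speed directly; the gain and speed limits are placeholders.

```python
import math


def circle_phase(xy, center):
    """Angular position (radians) of a vehicle around the circle's center."""
    return math.atan2(xy[1] - center[1], xy[0] - center[0])


def speed_command(own_xy, partner_xy, center, omega_nominal, gain, v_min, v_max):
    """Speed (m/s) that nudges two counter-clockwise-circling AUVs toward opposite sides.

    Each AUV commands angular rate omega_nominal + gain * error, where error is how
    far its lead over the partner falls short of pi. For any nonzero phase
    difference the two errors are equal and opposite, so the lead converges to pi
    (ignoring the speed limits). Multiplying by the AUV's own radius converts the
    angular rate into a speed, so different circle radii are handled.
    """
    lead = (circle_phase(own_xy, center) - circle_phase(partner_xy, center)) % (2 * math.pi)
    error = math.pi - lead
    radius = math.dist(own_xy, center)
    speed = radius * (omega_nominal + gain * error)
    return max(v_min, min(v_max, speed))
```

Working in angular rate and converting with each vehicle’s own radius is what lets the two robots hold opposite positions even though they circle at different radii.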

Hardware

In addition to working on software, I design and manufacture new hardware. In particular, I have redesigned the hydrophone mounting hardware and retrofitted the Kort nozzle and rudder.

Note that pictures of the new system will be added around the third week of January when I regain access to the lab.

Hydrophone Mounting Hardware

In order to get useful localization data, the hydrophones must be offset from the body of the Iver by approximately 0.4 meters and spaced 2.4 meters apart. The offset from the body avoids interference from the propeller and ensures that the sensors remain submerged during surface runs; the spacing is necessary to get adequate angular resolution.
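Why the spacing matters can be seen from an idealized far-field model. This is an assumption for illustration, not Lotek’s method: a straight fore-aft baseline of length $d$, sound speed $c$, angle off the bow $\alpha$, and a receiver that resolves arrival-time differences $\Delta t$ to within $\delta t$ (a figure not given here).

$$
\Delta t = \frac{d\cos\alpha}{c}
\qquad\Longrightarrow\qquad
\alpha = \arccos\!\left(\frac{c\,\Delta t}{d}\right)
$$

$$
\delta\alpha \approx \left|\frac{\partial\alpha}{\partial(\Delta t)}\right|\delta t
= \frac{c}{d\sqrt{1-\left(c\,\Delta t/d\right)^{2}}}\,\delta t
= \frac{c\,\delta t}{d\,\sin\alpha}
$$

For a fixed timing resolution, the angular uncertainty scales as $1/d$, so doubling the spacing roughly halves it. The estimate is sharpest abeam ($\alpha = 90^\circ$) and degrades toward the bow and stern, where $\sin\alpha \to 0$.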

The old system made use of foam padding and hose clamps to hold a single-piece PVC frame to the Ivers. The hydrophones and their cables were then taped to the frame.

This system was bulky (both to transport and hydrodynamically), took forever to set up or tear down, and looked terrible.

I ended up machining mounting brackets to attach the hydrophone frame to the AUVs’ racks. I then mocked up a modular frame out of PVC as a prototype. This frame was able to break down into a small bundle that was much easier to transport than the previous frame. To make tape unnecessary, I designed mounting brackets for the hydrophones and clips to attach the cables to the frame. To top it all off, Hannah shortened up the cables.

Previously, assembling the frame took close to 15 minutes of fumbling around and a headache. With the prototype system, it was as simple as threading together a few parts, sliding the hydrophones in place and tightening them down, then snapping the cord into the holders. Not only is it a lot easier and less painful, it’s less wasteful too!

One thing that I forgot to consider was that removing all of the foam previously in the mounting system drastically affected the buoyancy of the Ivers. The first time we tested out the new mounting system, the Iver sank! Fortunately, it was only in a few feet of water, so we didn’t lose it. But it was in Phake Lake at the Bernard Field Station, and that water is gross, so I wasn’t too happy about having to dive in after it. Afterwards, we removed some of the ballast and the Ivers achieved neutral buoyancy once again.

Unfortunately, the current prototype system is not sufficient for extended use. While the cord holders are durable enough for continued use, the 3D-printed hydrophone holders and PVC frame have not held up well. Repeatedly tightening and loosening the screws in the holders eventually causes the holders to fall apart, and the joints on the PVC frames are not flush, making them major stress risers that will fail if the Ivers are transported with the frames attached (the bouncing motion is too much for the PVC to withstand).

That said, work is in progress to replace the 3D printed parts with machined ones and the PVC frame with a carbon fiber one.

Kort Nozzle and Rudder

I also modified the existing rudders in order to attach the long-absent Kort nozzles and thereby improve the speed and efficiency of the Ivers. To attach them, I also had to manufacture new support struts for the nozzles; the old ones were bent out of shape during transport on an earlier mission.

Publications

Recent Work

- Using Time of Flight Distance Calculations for Tagged Shark Localization with an AUV [PDF] (I co-authored this paper)

- A Multi-AUV State Estimator for Determining the 3D Position of Tagged Fish [PDF] (I co-authored this paper)

Previous Work

- Tracking and Following of a Tagged Leopard Shark with an Autonomous Underwater Vehicle [PDF]

- A Multi-AUV System for Cooperative Tracking and Following of Leopard Sharks [PDF]

- Tracking of A Tagged Leopard Shark with an AUV: Sensor Calibration and State Estimation [PDF]

- Multi-Robot Control for Circumnavigation of Particle Distributions [PDF]

Misc. Photos